Anyone else mildly confused by all of the generative AI models available?

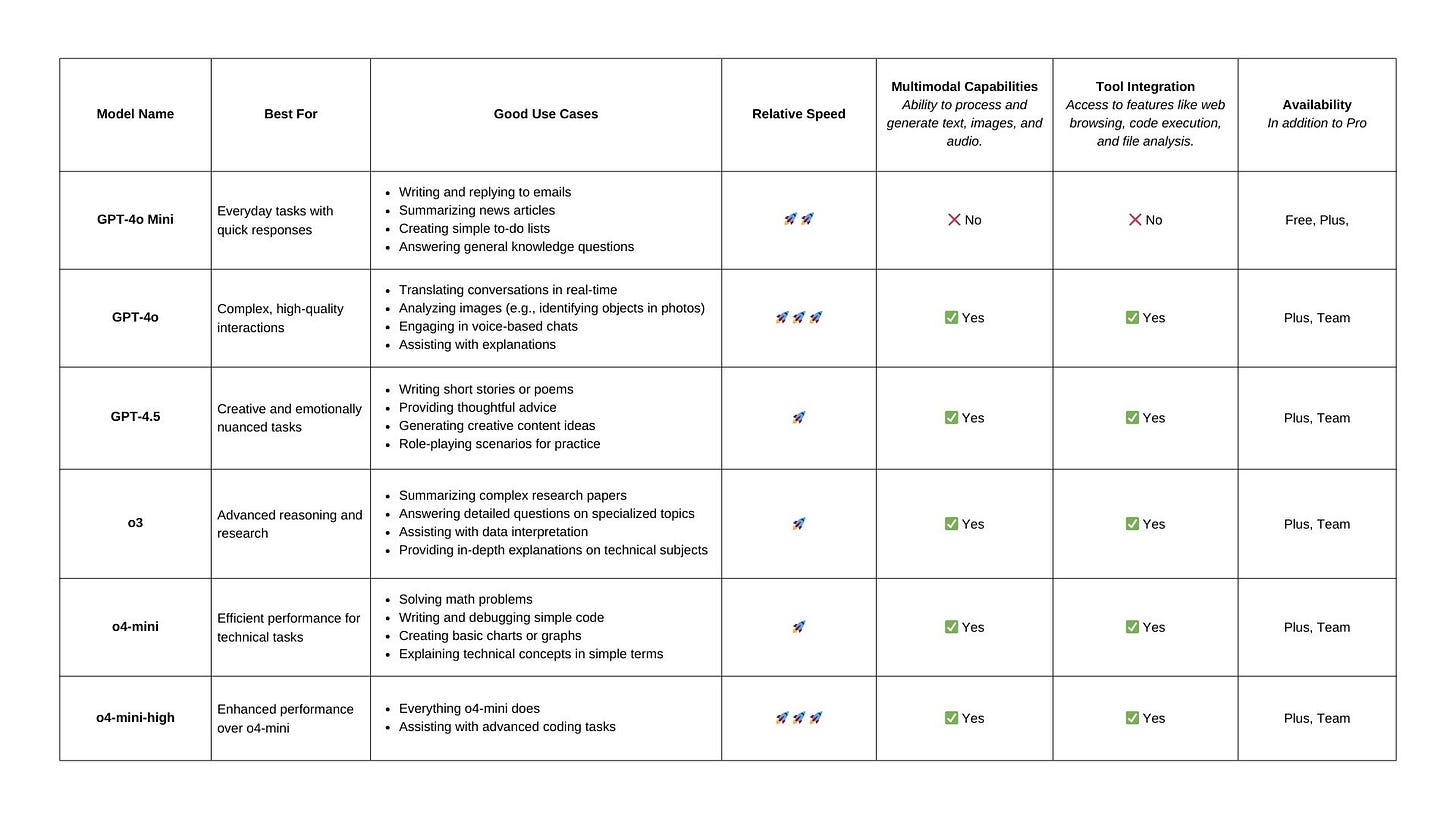

I don’t just mean ChatGPT vs. Claude vs. Gemini vs. Llama vs. Raccoon (I made that last one up). I mean within ChatGPT – if you have access to a paid version, there are so many models to choose from! They all seem fairly similar. Some models are just improvements over prior versions, while others are built differently to do different things. Confused? Me too.

There’s a decent explainer on OpenAI’s website. I used that and additional sources to create a model-selection chart for ChatGPT. It’s current as of April 21, 2025. You can view the chart here and download a PDF below:

For quick reference, I find myself typically using:

GPT-4o as my default (60% of the time)

GPT-4.5 any time I’m doing something creative (20% of the time)

o3 for anything requiring heavier analysis (20% of the time)

This will change soon, but hopefully it gives you something useful to grab hold of!

That's it for this edition - please reach out if I can be at all helpful.

Be compassionate and intentional.

I’ve been experimenting with several models locally and the variability is intriguing. In some cases, efficacy seems to be inversely proportional to the size of the model. Ironically, for coding tasks, Gemma seems to provide more meta info than Llama :-)